Every organization today runs on the cloud. From startups to global enterprises, the cloud has become the default foundation of innovation. We have the best software engineers building scalable systems. Dedicated FinOps teams analyzing every dollar. World-class monitoring tools visualizing every metric. And yet, despite all this sophistication, one theme continues to echo across leadership reviews:

“We’re spending too much on the cloud.”

Budgets are tightening, CFOs are asking tougher questions, and the answers are getting harder to find. The rise of AI has only amplified this challenge, as Large Language Models (LLMs) bring with them unpredictable and often opaque consumption patterns. Consider a simple vector database query that isn’t optimized. Perhaps it retrieves more embeddings than necessary or runs repeatedly without caching. On the surface, it looks harmless; it is just another API call in a sea of data operations. But under the hood, it can trigger massive compute workloads, balloon storage requirements, and rack up thousands of dollars overnight. Multiply that by dozens of teams experimenting with AI features, and suddenly the CFO’s question “Why did our bill spike last week?” becomes a lot harder to answer.

Observability tools have helped us understand what’s happening, but their own costs are rising too. The very systems designed to bring clarity are now part of the financial puzzle. Every log, trace, and metric we collect carries a cost. As teams instrument more services and expand their monitoring coverage, the volume of data ingested by observability platforms grows multiplicatively, because more services, more signals per service, and longer retention compound one another. That means more storage and more compute to process insights, and each adds another layer to the bill. Ironically, the deeper we look for visibility, the more expensive that visibility becomes.
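A back-of-envelope estimate makes the compounding visible. The sketch below assumes hypothetical figures (50 services, 200 telemetry events per second each, 500 bytes per event, 30-day retention); none come from a real bill, and per-GB prices are left out because they vary by vendor.

```python
# Back-of-envelope telemetry volume model. All inputs are hypothetical;
# multiply the results by your vendor's per-GB ingest and storage rates.
SECONDS_PER_DAY = 86_400


def daily_ingest_gb(services: int, events_per_sec: float, bytes_per_event: int) -> float:
    """Gigabytes of logs, traces, and metrics ingested per day across all services."""
    return services * events_per_sec * bytes_per_event * SECONDS_PER_DAY / 1e9


def retained_gb(daily_gb: float, retention_days: int) -> float:
    """Steady-state volume held in storage for a given retention window."""
    return daily_gb * retention_days


if __name__ == "__main__":
    baseline = daily_ingest_gb(services=50, events_per_sec=200, bytes_per_event=500)
    print(f"baseline: {baseline:,.0f} GB/day, {retained_gb(baseline, 30):,.0f} GB retained")

    # Double the instrumented services and the retention window.
    expanded = daily_ingest_gb(services=100, events_per_sec=200, bytes_per_event=500)
    print(f"expanded: {expanded:,.0f} GB/day, {retained_gb(expanded, 60):,.0f} GB retained")
```

Doubling both the service count and the retention window quadruples the retained volume, because the factors multiply rather than add.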

Every new tool, every dashboard, every FinOps practice promised better control. Yet somehow, the problem keeps evolving faster than the solutions. Observability and FinOps have grown into powerful disciplines. One has mastered resilience, and the other accountability. But we still struggle to answer the basic question: where does our money actually go, and how can we control it without stalling innovation? The answer to this lies in understanding how our teams operate.

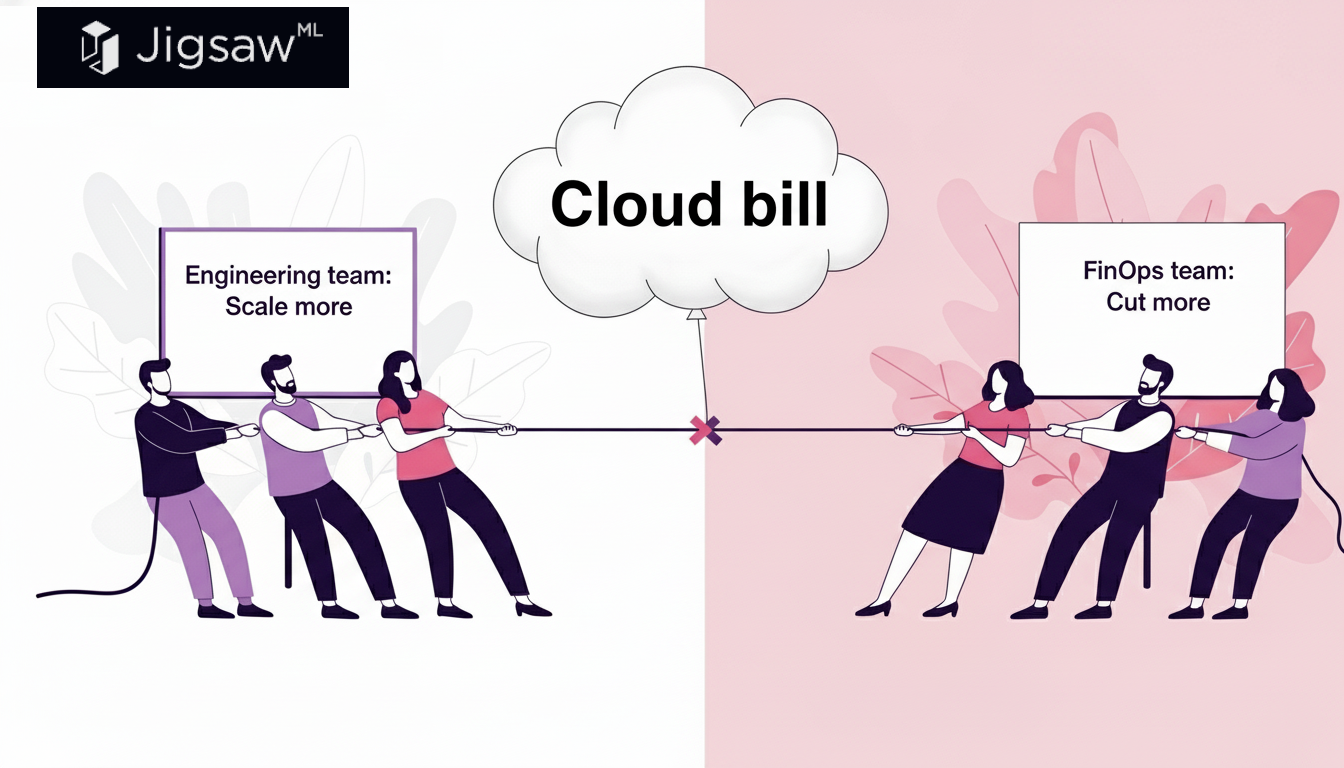

Two teams, two different scoreboards

Every team in a modern organization plays to an invisible scoreboard: the unspoken metric that defines what “winning” looks like. Understanding these competing scoreboards is key to understanding why cloud cost optimization feels impossible.

For engineers:

The scoreboard is system performance. Is the system fast? Is uptime rock solid? Is the code clean and maintainable? Their world revolves around speed, scalability, and innovation. When a service goes down at 2 a.m., the engineer’s first thought isn’t “What’s the cheapest fix?” It’s “What’s the fastest and most reliable fix?”

Engineers build systems to last, and that often means overprovisioning, adding redundancy, and choosing reliability over thrift. Their scoreboard is uptime, not budget variance.

For FinOps teams:

The scoreboard looks completely different. They’re not rewarded for uptime or velocity; they’re rewarded for cost efficiency. Their day starts with spreadsheets, anomaly alerts, and dashboards that light up in red when budgets are breached. They dive into usage reports, trace every spike, and chase down cost centers like detectives solving a case.

Where engineers see systems, FinOps sees spend.

Where engineers see elasticity, FinOps sees volatility.

Both sides are doing their jobs. Both are optimizing for success. But they’re optimizing in different directions. This is where the friction begins: a silent tug-of-war.

The invisible tug-of-war

Consider a common scenario:

As Q4 approaches, the engineering team ramps up its API service to handle the holiday traffic spike. They provision extra compute, storage, and redundancy, all good decisions from a reliability standpoint. Meanwhile, the FinOps team spots a sudden 40% increase in EC2 usage. To them, this looks like waste: maybe a forgotten autoscaling group, maybe plain inefficiency. They flag it, question it, and ask engineering to justify it. Now, instead of collaboration, what you have is tension. FinOps feels unheard. Engineering feels micromanaged. And leadership? They’re stuck in the middle, forced to make trade-offs between speed and savings.
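From the FinOps side, a spike like this usually surfaces through a threshold rule. A minimal sketch of such a rule (the 25% threshold, the `planned_events` annotations, the service name, and the usage figures are all hypothetical, not any specific tool's behavior) shows the gap: without context from engineering, a planned Q4 scale-up and a forgotten autoscaling group look identical.

```python
from dataclasses import dataclass


@dataclass
class UsageChange:
    service: str
    last_week_hours: float  # instance-hours in the previous week
    this_week_hours: float  # instance-hours in the current week

    def pct_change(self) -> float:
        return (self.this_week_hours - self.last_week_hours) * 100 / self.last_week_hours


def classify(change: UsageChange, threshold_pct: float, planned_events: dict[str, str]) -> str:
    """Flag week-over-week growth above the threshold unless engineering annotated it."""
    if change.pct_change() <= threshold_pct:
        return "ok"
    if change.service in planned_events:
        return f"expected: {planned_events[change.service]}"
    return "anomaly"


# Hypothetical service and numbers: a 40% week-over-week increase.
api = UsageChange("checkout-api", last_week_hours=1_000, this_week_hours=1_400)
print(classify(api, threshold_pct=25, planned_events={}))
print(classify(api, threshold_pct=25, planned_events={"checkout-api": "Q4 holiday capacity"}))
```

The rule itself is not wrong; it simply cannot see intent. The annotation is the point where engineering context enters the cost signal.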

This invisible tug-of-war happens every day across modern cloud organizations. The more teams specialize, the more disconnected they become. The irony is that both sides want the same thing: a fast, reliable, cost-efficient business. But the system they operate in isn’t designed for alignment.

When AI enters, the complexity explodes

Just when organizations were learning to balance engineering and FinOps, AI workloads entered the scene and changed the rules completely. Even a single AI API can quickly become a significant cost driver.

Consider a chatbot integrated with OpenAI’s API (the models behind ChatGPT). During testing, a few thousand queries appeared manageable and well within budget. But when the bot went live in production, user demand surged, caching wasn’t implemented correctly, and API calls multiplied across sessions. Each request is billed by the number of input and output tokens it consumes, at per-token rates that are higher for larger models, and the accompanying vector database lookups add costs of their own. What seemed trivial in testing turned into thousands of dollars spent overnight.
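The arithmetic behind this example is worth making explicit. Hosted LLM APIs typically bill per token, with separate rates for input and output, so daily spend scales with request volume times tokens per request. The sketch below uses placeholder prices and traffic figures (all hypothetical, not any provider's actual rates) and assumes a cache hit never reaches the model.

```python
def daily_llm_cost(
    requests_per_day: int,
    input_tokens: int,         # prompt plus any retrieved context, per request
    output_tokens: int,        # generated tokens, per request
    usd_per_1m_input: float,   # hypothetical price per million input tokens
    usd_per_1m_output: float,  # hypothetical price per million output tokens
    cache_hit_rate: float = 0.0,
) -> float:
    """Estimate daily API spend, assuming cache hits never reach the model and cost nothing."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    billed_requests = requests_per_day * (1.0 - cache_hit_rate)
    per_request = (input_tokens * usd_per_1m_input + output_tokens * usd_per_1m_output) / 1_000_000
    return billed_requests * per_request


# Placeholder traffic and prices, for illustration only.
PRICES = {"usd_per_1m_input": 2.50, "usd_per_1m_output": 10.00}
testing = daily_llm_cost(3_000, 1_500, 500, **PRICES)
production = daily_llm_cost(2_000_000, 1_500, 500, **PRICES)
cached = daily_llm_cost(2_000_000, 1_500, 500, **PRICES, cache_hit_rate=0.6)
print(f"testing: ${testing:,.2f}/day")
print(f"production: ${production:,.2f}/day")
print(f"production with 60% cache hits: ${cached:,.2f}/day")
```

The per-request cost barely registers in testing; volume and cache misses are what turn it into a line item. If retrieved passages are inserted into the prompt, larger retrievals also raise `input_tokens`, which is one way vector database lookups feed back into the API bill.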

Neither the engineering team nor the FinOps team anticipated a spike of this size. The combination leaves most organizations in the following state:

1. Engineers without cost awareness

From an engineering perspective, this is an operational challenge, not a cost problem. Their culture emphasizes experimentation and rapid deployment, leaving cost considerations as an afterthought. No engineer can anticipate every usage spike across millions of requests, nor foresee the interplay of model complexity, user input, and data retrieval. Even deliberate scaling decisions like enabling autoscaling can inadvertently drive massive costs. The dynamic, evolving nature of AI workloads exceeds the limits of traditional engineering oversight.

2. FinOps with no control

FinOps teams, traditionally built around predictable cost models, now face an unprecedented challenge. A single API call can generate costs across multiple services, accounts, and cloud regions, often without proper tagging or ownership context. Micro-spikes multiply across concurrent experiments, making attribution difficult. While FinOps tools provide visibility, they were never designed to manage unpredictable, AI-driven workloads in real time. Analysts can flag anomalies after the fact, but they cannot proactively prevent runaway spend without deep engineering context, which is often unavailable.
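Attribution breaks down when line items lack ownership metadata. A minimal sketch, assuming billing line items exported as records with an optional `team` tag (the field names, service names, regions, and figures are hypothetical, not a specific provider's billing schema), shows how untagged spend ends up in a bucket nobody owns.

```python
from collections import defaultdict
from typing import Optional, TypedDict


class LineItem(TypedDict):
    service: str
    region: str
    cost_usd: float
    team: Optional[str]  # ownership tag; None when the resource was never tagged


def cost_by_owner(items: list[LineItem]) -> dict[str, float]:
    """Sum spend per owning team; untagged spend lands in an 'UNATTRIBUTED' bucket."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        owner = item["team"] or "UNATTRIBUTED"
        totals[owner] += item["cost_usd"]
    return dict(totals)


def unattributed_share(items: list[LineItem]) -> float:
    """Fraction of total spend that no team owns."""
    total = sum(item["cost_usd"] for item in items)
    if total == 0:
        return 0.0
    return cost_by_owner(items).get("UNATTRIBUTED", 0.0) / total


# Hypothetical export: one AI feature's spend spread across services and regions.
items: list[LineItem] = [
    {"service": "llm-api", "region": "us-east-1", "cost_usd": 1200.0, "team": "search"},
    {"service": "vector-db", "region": "eu-west-1", "cost_usd": 800.0, "team": None},
    {"service": "gpu-compute", "region": "us-west-2", "cost_usd": 2000.0, "team": None},
]
print(cost_by_owner(items))  # {'search': 1200.0, 'UNATTRIBUTED': 2800.0}
print(f"{unattributed_share(items):.0%} of spend has no owner")  # 70% of spend has no owner
```

In this toy export, most of the spend cannot be routed to a team, which is the situation where FinOps can see the spike but has no one to ask about it.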

3. The business dilemma

The result is a perfect storm: traditional engineering and FinOps practices alone cannot keep pace. Engineers cannot anticipate every scenario, FinOps cannot track every spike, and leadership is left asking: How can a business user make sense of this spend? Was this investment worthwhile?

The limits of dashboards and alerts

Here’s the uncomfortable truth: Organizations aren’t struggling due to a lack of visibility. They’re struggling because of how we interpret and act on that visibility.

Modern enterprises are flooded with dashboards, billing alerts, anomaly detectors, cloud spend explorers, and AI-powered insights. And yet none of these tools has brought the teams into alignment, and none can answer the fundamental question: Is this expense truly justified for the business?

Metrics and alerts show spikes, anomalies, or overages, but they rarely convey context, intent, or business impact. As a result, teams interpret the same data differently and often pull in opposite directions, which breeds friction and inefficiency across the organization.

FinOps may flag an unexpected cost and reject the budget, focusing on discipline and predictability. Engineers, who understand the operational need behind the expense, ask for additional resources to ensure performance and reliability. Leadership, caught between the two, often approves the budget. This is not because the cost was fully understood or justified, but because the organization lacks a common framework to reason about trade-offs. This cycle repeats endlessly, producing analysis paralysis, reactive spending, and misalignment, all while the underlying system inefficiencies remain unaddressed.

The million-dollar question: How do we create alignment across teams?

When teams operate in silos, alignment becomes a significant challenge. Consider a typical scenario: a Product Manager is focused on launching a new AI-powered feature quickly to satisfy user demand. Engineers are focused on system performance, reliability, and scalability, ensuring the feature works under peak load. Business leadership is primarily concerned with revenue impact, adoption metrics, and overall ROI. Meanwhile, the FinOps team is tracking cloud spend and is alarmed by the projected costs of running high-volume inference pipelines or API calls. Each team is working toward what it sees as “success,” but their metrics, priorities, and incentives are different and sometimes conflicting. Without a shared framework, these teams often struggle to operate cohesively, leading to friction, misaligned priorities, and inefficient decision-making.

So what does it take to achieve true alignment?

A cultural overhaul, process redesign, redefined KPIs, or perhaps even the creation of a new team altogether? In practice, implementing all of these is neither simple nor quick.

Changing culture requires consistent effort over months or even years, building trust, encouraging cross-team collaboration, and fostering shared accountability. Redesigning processes involves mapping workflows, identifying gaps, and establishing new routines that integrate financial, operational, and business perspectives. Redefining KPIs is similarly complex, as it demands that organizations shift from measuring isolated outputs to evaluating outcomes that balance cost, performance, and business value. And if new roles or teams are introduced, hiring, onboarding, and embedding them into existing structures adds yet another layer of time and effort.

So, how can organizations solve this problem more effectively? How can we redefine the problem space for the new era, ensuring that cost, performance, and business value are considered together rather than in isolation?

The new need: Shared mental model

To truly solve the cloud cost problem, organizations need to move beyond tools, dashboards, and alerts and focus on building a shared mental model that illustrates how architecture, performance, and costs interact. This model will serve as a common reference point, helping teams answer critical questions:

- What business feature drives this cost?

- Which team owns it?

- What trade-offs exist between speed, cost, and reliability?

- How do architectural decisions translate into financial outcomes?

- What’s the cost of this outage?

By mapping technical and financial dimensions together, the mental model provides clarity and context that isolated dashboards and reports cannot.
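The questions above become answerable once the technical and financial dimensions live in one record. The sketch below is a hypothetical schema (the `FeatureCost` fields, feature names, teams, and numbers are assumptions for illustration, not an established standard); its point is that cost per unit of business outcome can be computed only when owner, spend, usage, and performance sit side by side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureCost:
    feature: str             # business feature that drives the spend
    owner_team: str          # team accountable for it
    monthly_cost_usd: float  # cloud and API spend attributed to the feature
    monthly_units: int       # business outcome, e.g. conversations resolved
    p95_latency_ms: float    # the performance side of the trade-off

    @property
    def cost_per_unit(self) -> float:
        """Spend per unit of business outcome; infinite if the feature delivered nothing."""
        if self.monthly_units == 0:
            return float("inf")
        return self.monthly_cost_usd / self.monthly_units


def rank_by_unit_cost(features: list[FeatureCost]) -> list[FeatureCost]:
    """Most expensive per unit of outcome first: a natural place to start a trade-off discussion."""
    return sorted(features, key=lambda f: f.cost_per_unit, reverse=True)


# Hypothetical portfolio of two AI features.
portfolio = [
    FeatureCost("semantic-search", "search", 6_000.0, 300_000, 120.0),
    FeatureCost("support-chatbot", "conversational-ai", 12_000.0, 40_000, 850.0),
]
for f in rank_by_unit_cost(portfolio):
    print(f"{f.feature:16} owner={f.owner_team:18} ${f.cost_per_unit:.2f}/unit  p95={f.p95_latency_ms:.0f} ms")
```

A record like this lets engineering, FinOps, and leadership argue about the same number (cost per outcome at a given latency) instead of three different dashboards.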

When teams operate from the same mental model, decisions become contextual rather than confrontational. Engineers gain visibility into the cost implications of their design choices. FinOps understands the technical intent behind resource usage. Leadership can evaluate spending in terms of real business value rather than abstract numbers.

Without this shared view, organizations remain trapped in a cycle of reactive optimization: alerts trigger escalations, engineers make defensive changes, budgets get repeatedly debated, and frustration mounts across all levels. The mental model shifts focus from chasing metrics to making informed, aligned decisions that balance cost, performance, and business outcomes.
