Every modern enterprise operates on a foundation of distributed, interdependent systems spanning thousands of microservices, APIs, data pipelines, and infrastructure components. Whether it’s a login, a search, a purchase, or a recommendation, every user interaction triggers a complex choreography of services owned by different teams, deployed across multiple clouds, and governed by distinct operational practices. What once existed as a single monolithic application has evolved into an ecosystem of loosely coupled parts, each optimized for speed, scalability, and independence, yet collectively responsible for the company’s most fundamental promise: reliability and performance.

Consider a seemingly simple user event, say, a customer unable to log in. For the engineer on call, it manifests as an API timeout or authentication error in the logs. For operations, it’s a reliability incident affecting uptime. For the product manager, it signals friction in a critical user journey that could hurt engagement metrics. Finance sees it differently: a potential cost exposure as infrastructure scales up in response to retries and failed sessions. For the VP of Engineering, it’s a risk to delivery commitments; for the CFO, it’s a potential drain on operational efficiency and brand trust. The same event, viewed through multiple lenses, becomes a collage of interpretations, each valid in isolation, yet disconnected in meaning.

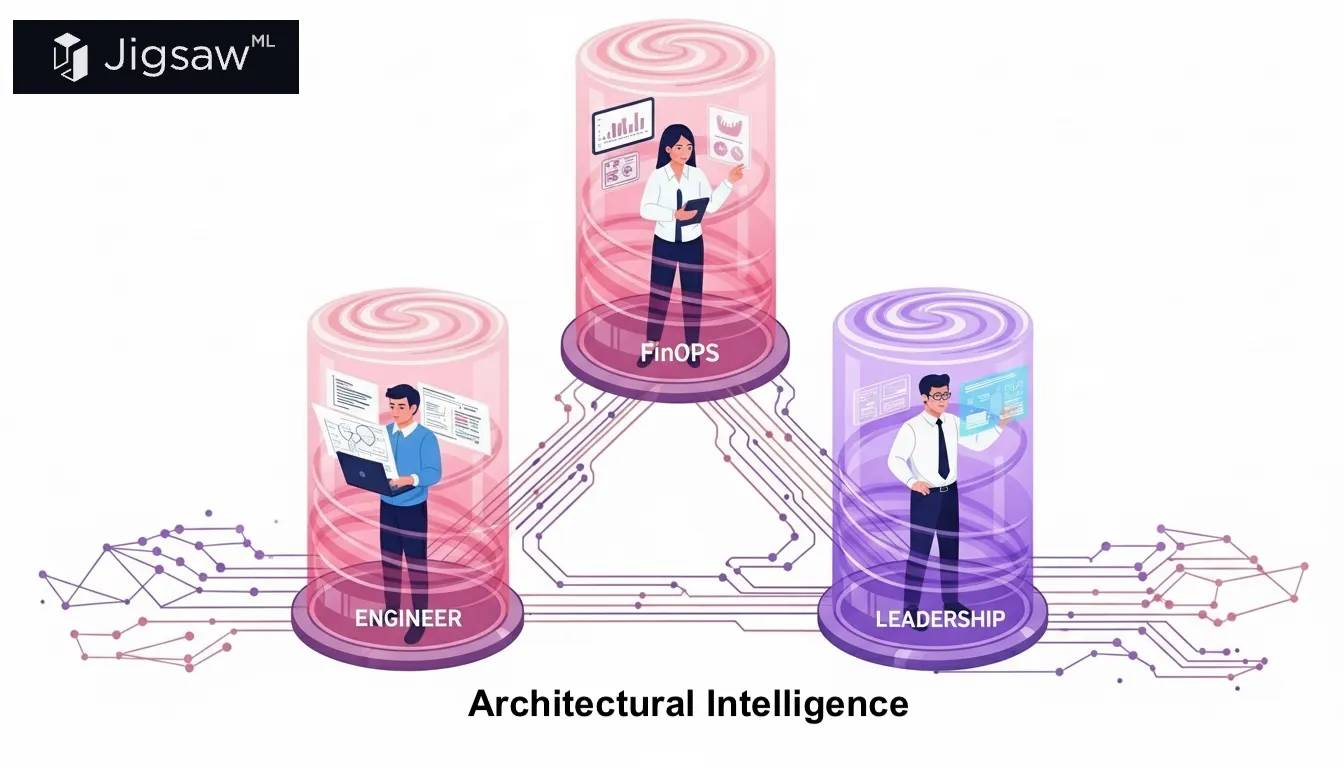

This is the paradox of modern visibility. We have specialized teams, specialized tools, and specialized dashboards, each providing precision in one dimension but blindness in others. Engineers see system metrics without cost context. FinOps sees cloud spend without architectural context. Product managers see feature adoption without infrastructure context. Our collective specialization, while powerful, has fractured our understanding of how systems behave as a living whole. What enterprises face today is not a lack of data, but the absence of a shared mental model that any organization could adopt.

We explored the foundations of this shared mental model recently. In this post, we will focus on how to construct this mental model and why observability companies are uniquely positioned to bring it to life.

Architecture as the shared mental model

That shared mental model is architecture.

Architecture sits at the intersection of all disciplines; it is where cost, performance, reliability, and risk converge into one coherent system. When people from different functions look at the same architectural model, they stop debating numbers and start discussing relationships: how one change affects another, how trade-offs ripple across the stack. Architecture diagrams provide the shared mental model that allows teams to reason collectively, not competitively.

It’s also important to recognize the cognitive power of diagramming. Humans understand systems spatially. We make sense of complexity through visual structure. A well-crafted architectural diagram does far more than list components; it externalizes thought. It converts tribal knowledge into shared clarity. It lowers cognitive load by revealing connections, dependencies, and flows in a single, navigable frame.

Diagrams turn abstract systems into tangible landscapes that anyone (engineers, product leaders, even CFOs) can reason about. Architecture becomes the foundation upon which every other insight depends. When people can see how their world fits together, alignment stops being an aspiration and becomes an instinct.

The evolution of Observability

The past decade has delivered remarkable progress in the disciplines of visibility. Observability platforms gave engineers unprecedented insight into application behavior through metrics, events, logs, and traces (MELT). FinOps frameworks introduced financial accountability to the once opaque world of cloud spending. Security and compliance systems brought rigor to vulnerability detection, access control, and policy enforcement. Each discipline matured rapidly, shaped by genuine need and remarkable innovation.

The problem is that all these advancements evolved in parallel. Each toolset answered a narrow, domain-specific question: Is the service healthy? What does this resource cost? Is this dependency secure? What they could not explain is how these dimensions influence one another: how a performance optimization changes cost dynamics, how a configuration choice impacts reliability, or how architectural drift silently expands the attack surface.

Enterprises, in response, built layers of specialized dashboards, workflows, and review cadences, each optimized for its audience, none truly connected to the others.

1. What Observability lacks

Observability, despite all its strengths, stops short of delivering context. It tells us what happened (CPU spikes, slow queries, latency surges) but not why it happened or how those events connect across the broader system. Observability platforms operate as powerful microscopes, revealing granular insights but often missing the architectural forest for the trees. As environments grow more distributed and AI workloads further blur operational boundaries, this lack of architectural understanding becomes a critical blind spot. Without knowing how systems, teams, and costs interrelate, observability data remains reactive: valuable, but incomplete.

2. The shift to cost-aware Observability

Gartner’s emerging trendlines are clear: the next generation of observability will be defined by companies that extend beyond metrics, events, logs, and traces into AI-powered, cost-aware observability. As enterprises face growing complexity in AI and cloud-native environments, observability vendors have both an opportunity and a responsibility to evolve. Integrating Architectural knowledge provides that evolutionary leap. It adds the missing vision layer, mapping how services, dependencies, data flows, and ownership structures connect. For observability providers, this means their tools can move from passively collecting telemetry to actively interpreting it in an architectural context. By embedding architectural maps directly within their platforms, observability companies can offer an experience that doesn’t just show behavior but explains it.

3. Observability meets Architecture

When observability integrates with Architectural knowledge, it can create a unified system of understanding across technical and business teams. Imagine a world where a latency spike in a database instantly correlates with its dependent microservices, the teams that own them, and the cost implications of each fix. Observability tools are already used by multiple personas in an organization:

● IT Ops teams use Observability platforms to monitor live production environments.

● Platform engineering teams use Observability platforms to ensure that their software meets its service-level objectives.

● Software Engineers use Observability platforms as an integrated part of CI/CD pipelines to get rapid feedback on code deployments.

● Business Analysts use observability platforms to understand and analyze key metrics.

Adding Architectural knowledge to the suite opens the door to serving new personas as well:

● FinOps teams can now evaluate efficiency alongside reliability, and engineers can visualize cause and effect in a single pane.

● Architects/Engineering Managers can weigh their technical architecture decisions against cost.

The result will be a new class of context-aware observability, one that connects performance, cost, and ownership in real time. By adopting Architectural knowledge, observability vendors not only future-proof their platforms but also redefine their value proposition: from monitoring what breaks, to illuminating how everything connects.
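The database latency scenario in this section can be sketched in a few lines of code. The example below is a minimal illustration, not a description of how any observability product works: the `Component` type, the service names, the owning teams, and the cost figures are all hypothetical. It assumes the architecture is available as a simple dependency map and walks it outward from the component that raised the alert to find every dependent service, its owner, and its cost.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    owner: str                 # hypothetical owning team
    monthly_cost_usd: float    # hypothetical cost figure
    depends_on: list[str] = field(default_factory=list)


def impacted_by(components: dict[str, Component], source: str) -> list[Component]:
    """Return every component that depends on `source`, directly or transitively."""
    # Invert the dependency edges: for each component, who depends on it?
    dependents: dict[str, list[str]] = {}
    for comp in components.values():
        for dep in comp.depends_on:
            dependents.setdefault(dep, []).append(comp.name)

    # Breadth-first walk outward from the component that raised the alert.
    seen = {source}
    queue = deque([source])
    impacted = []
    while queue:
        current = queue.popleft()
        for name in dependents.get(current, []):
            if name not in seen:
                seen.add(name)
                impacted.append(components[name])
                queue.append(name)
    return impacted


# Hypothetical architecture map; in practice this would come from real metadata.
ARCHITECTURE = {
    c.name: c
    for c in [
        Component("users-db", "data-platform", 4200.0),
        Component("auth-service", "identity", 1800.0, ["users-db"]),
        Component("profile-service", "growth", 950.0, ["users-db"]),
        Component("web-gateway", "platform", 2600.0, ["auth-service", "profile-service"]),
        Component("search-service", "discovery", 3100.0),
    ]
}

if __name__ == "__main__":
    # Pretend a latency alert just fired on the users database.
    hit = impacted_by(ARCHITECTURE, "users-db")
    for comp in hit:
        print(f"{comp.name:16} owner={comp.owner:10} cost=${comp.monthly_cost_usd:,.0f}/mo")
    print("teams to notify:", sorted({c.owner for c in hit}))
```

In this toy map, an alert on `users-db` reaches `auth-service`, `profile-service`, and `web-gateway`, while `search-service` is untouched. The point of the sketch is that the telemetry alone says only that the database is slow; the architectural map is what turns that signal into a list of affected services, teams, and spend.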

Conclusion: When Architecture becomes alive

For years, Observability has told us what happened. But in today’s AI-driven, cloud-native world, that’s no longer enough. You can’t run a modern enterprise with half the picture.

Real control requires understanding the system itself: its structure, its dependencies, its hidden pathways. The system isn’t static. It’s alive. And yet our architecture diagrams are frozen in time, outdated the moment they’re drawn.

Now imagine this:

What if Architecture diagrams could be drawn from your codebase in real time, then amplified with AI to interpret changes and surface insights that were never possible before? Fuse that with Observability, and suddenly we can solve problems at the speed of our systems.

This is the leap from looking at data to understanding the entire digital organism.

That will be the power of real AI – Architectural Intelligence.
